❄️ This essay extends my previous one, Career Snowball, written in 2019.

High interest rates shocking the real economy have been the central theme of 2023. Companies blamed the macroeconomy as they laid off employees, and we can easily feel the impacts of inflation amidst international conflicts. Such large, invisible waves have a significant effect on us commoners. Our best choice then would be to understand these waves and, like surfing, use them to our advantage.

When a surfer gets up and catches the wave and just stays there, he can go a long, long time. But if he gets off the wave, he becomes mired in shallows. – Charlie Munger

And a comparably powerful wave comes from technology. Just as we need to be more cautious with debt when interest rates are high, riding the wave of technology is a critical driving force in rolling out a career. For example, if computing performance hadn’t improved steeply in the 1980s, Apple and Microsoft might not have grown to this extent. The cloud computing of the 2010s substantially lowered the cost of scaling and enabled the success of companies like Stripe, Pinterest, and Airbnb. Notably, those enabling innovations were often not about something incredibly new but about increasing performance / $ or decreasing the required capital expenditures (CAPEX) of existing technology.

This essay describes two gigantic waves shaping the 2020s and a third significant wave derived from them.

- The End of Moore’s Law and Heterogeneous Supply: How computers are evolving.

- Increasingly Larger Demand: How computer usage is evolving.

- Complexity and the Solution War: How the software in the middle is evolving.

Finally, to show that these large waves can help not only executives and investors but also individuals, I briefly share personal examples of using these trends as an early-career professional.

1. The End of Moore’s Law and Heterogeneous Supply

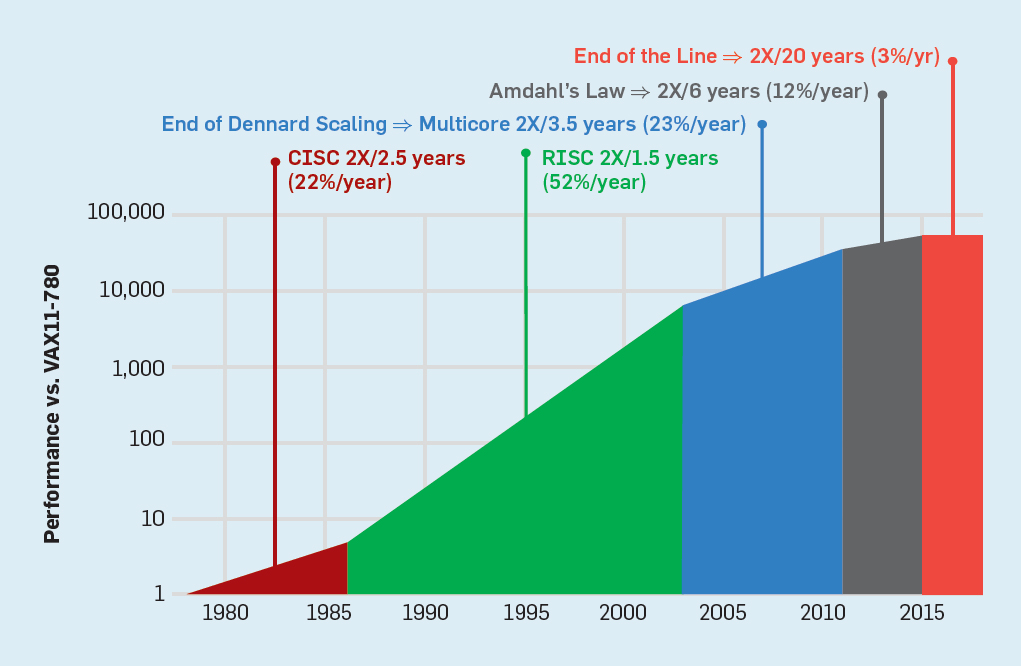

The fundamental reason computers have become so significant to humanity is that their performance has improved at an almost unreal pace over the past 50 years. As “predicted” in 1965 by Moore, the co-founder of Intel, the performance of computer chips has grown exponentially, taking us beyond the era when every household had a personal computer to a world where each individual carries a mobile one. Developers have enjoyed tremendous productivity gains by using human-friendly languages like Python without much hassle. This powerful growth felt like a “law” for a full 50 years, but it was never guaranteed, and as the graph shows, growth has slowed to a mere 3% per year since around 2015 [1].
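To make the slowdown concrete, here is a minimal arithmetic sketch of what each growth rate compounds to over a decade. The 3% figure is the one quoted above; modeling the earlier era as "doubling every two years" is a simplifying assumption for illustration, not a number taken from the cited lecture, and the function names are hypothetical.

```python
def annual_rate_from_doubling(doubling_years: float) -> float:
    """Annual growth rate implied by doubling once every `doubling_years`."""
    return 2 ** (1 / doubling_years) - 1


def growth_factor(annual_rate: float, years: int) -> float:
    """Cumulative improvement after compounding `annual_rate` for `years`."""
    return (1 + annual_rate) ** years


# Assumption: the "Moore's Law era" is modeled as doubling every 2 years.
moore_rate = annual_rate_from_doubling(2.0)      # roughly 41% per year
post_2015_rate = 0.03                            # figure quoted in the text

decade_moore = growth_factor(moore_rate, 10)     # five doublings, about 32x
decade_now = growth_factor(post_2015_rate, 10)   # about 1.34x

print(f"10 years at {moore_rate:.0%}/yr: {decade_moore:.1f}x")
print(f"10 years at {post_2015_rate:.0%}/yr: {decade_now:.2f}x")
```

Under these assumptions, software that once got roughly 32 times faster per decade "for free" now gets about a third faster, which is why the remaining gains have to come from somewhere else.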

Hardware performance has not stopped advancing entirely, but the old force that automatically made all software faster has definitely weakened. To fill this void, in place of general-purpose computing on CPUs, there has been a natural influx of special-purpose hardware that performs a narrower set of tasks far better. Nvidia’s GPUs, Google’s TPUs, YouTube’s Argos, and startups like Furiosa are all good examples. Even within general-purpose CPUs, the industry has begun to shift away from the previously dominant Intel x86 architecture towards more cost-effective options like AWS Graviton and GCP Tau.

This massive wave hit Big Tech first [2], but it will affect everyone sooner or later. Revolutionary shifts are happening not just in computing but also in networks and storage, and cloud computing opens up comparably large new possibilities on top of them. For example, Snowflake introduced a data platform that uses the cloud to separate storage from computing. More cases like this, which weave computing, networks, and storage together in ways different from the past, will continue to emerge. Accordingly, the definition of a “computer” is changing at every level, and the boundaries between those levels are blurring. We must therefore accept a new heterogeneous world and let the old homogeneous x86 one fade away.

2. Increasingly Larger Demand

If the supply issues above had led to a decrease in demand, they wouldn’t have been very disruptive (though the value of development roles would have decreased significantly). However, we are truly living in the era of the software transition. The number of internet users has risen from fewer than a billion 20 years ago to 5.3 billion today [3], and virtually everyone carries a smartphone that turns every moment into digital photos and videos. The ability to use such data effectively to create value represents both an opportunity and a risk for everyone.

Every company is now a software company. – Satya Nadella

It’s not just the quantity of data that is increasing; the technology to understand this data is also advancing tremendously: from recognizing the presence of a leopard in an image in 2012 (AlexNet), to defeating a Go champion in 2016 (AlphaGo), to displaying creative and problem-solving abilities that are difficult to distinguish from humans in 2023 (GPT-4). Moreover, the demand for computing power to create such high performance is growing even faster than Moore’s Law [4]. While large language models (LLMs) like ChatGPT have recently received immense attention, recommendation systems like those used for advertising are still very much in demand [5].
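A rough sketch of how quickly that gap opens up, using the "doubling every six months" figure from the EPOCH footnote for ML compute demand. The two-year doubling period on the supply side and the five-year horizon are illustrative assumptions, and the helper name is hypothetical.

```python
def growth_from_doubling(years: float, doubling_period_years: float) -> float:
    """Total growth after `years` if the quantity doubles every period."""
    return 2 ** (years / doubling_period_years)


horizon_years = 5  # assumption: an arbitrary horizon for illustration

# Demand: ML training compute doubling every six months (per the footnote).
demand = growth_from_doubling(horizon_years, 0.5)   # 2**10 = 1024x

# Supply: assumed classic pace of doubling every two years.
supply = growth_from_doubling(horizon_years, 2.0)   # 2**2.5, about 5.7x

gap = demand / supply                               # about 181x

print(f"demand grows {demand:.0f}x, supply grows {supply:.1f}x")
print(f"shortfall to cover with more or better hardware: {gap:.0f}x")
```

Even against the generous two-year doubling assumption, demand outruns a single chip's improvement by more than two orders of magnitude in five years; the difference has to be covered by larger fleets, specialized accelerators, and more efficient software.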

In addition to the amount of data and the size of the computing needed to process it, the speed at which data flows has increased substantially. For example, when a user shows interest in a product, services want to use that information immediately to further engage the user. In the past, data analysis itself was a novel concept, and even where it existed, there was a significant delay between a user’s action and its analysis. Now, everything is expected to happen instantaneously. Real-time stream processing raises demand even more than offline data analysis did, because offline jobs could be scheduled onto otherwise idle computing resources.
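The difference between the two modes can be sketched in a few lines. This is a toy model, not any particular framework's API: the `Event` type, the function names, and the sample data are all hypothetical. The offline job makes every event usable only when the batch runs, while the streaming path updates state per event and can react right away.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Event:
    ts: int        # seconds since the start of the day
    user: str
    product: str


def batch_latencies(events: Iterable[Event], batch_run_ts: int) -> list[int]:
    """Offline analysis: each event waits until the batch job runs."""
    return [batch_run_ts - e.ts for e in events]


def stream_interest(events: Iterable[Event]) -> Iterator[tuple[int, str, str, int]]:
    """Streaming: update the per-user interest count as each event arrives."""
    counts: Counter = Counter()
    for e in events:
        counts[(e.user, e.product)] += 1
        # The updated count is available at the event's own timestamp.
        yield e.ts, e.user, e.product, counts[(e.user, e.product)]


events = [
    Event(10, "u1", "blender"),
    Event(20, "u1", "blender"),
    Event(30, "u2", "diet book"),
]

# A nightly job at the end of the day (86,400 s) sees events hours late.
print(batch_latencies(events, batch_run_ts=86_400))

# The stream knows about u1's second look at the blender at t=20.
for update in stream_interest(events):
    print(update)
```

The batch job can run whenever machines are idle, whereas the streaming path needs capacity available at the moment each event arrives, which is the extra demand described above.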

3. Complexity and the Solution War

The two waves above press on software from both sides, creating a new wave. Three giants that rank among the top five companies by global market capitalization compete fiercely in the public cloud (AWS, Azure, GCP). Around them, as shown below, numerous SaaS companies are emerging [6]. Spending on the public cloud is expected to exceed half of the total IT budget only by 2025 [7], so this era of intense competition and high uncertainty will continue for quite some time.

The fundamental reason for this chaos is that the problems software has to solve have become inherently more difficult because of the waves described above (so-called essential complexity [8]). The industry is responding by breaking software down into smaller units (microservices), introducing data-centric structures (data architecture), and forming organizations to handle these tasks (platform engineering). Still, this area will not become dramatically easier anytime soon.

- Computing (microservice): Previously, a “backend” mainly consisted of a single process and a database (monolith), but now more evolved logic is being split into smaller units. Not only has the logic become more complex, but the opportunity to use different hardware for each type of logic is also increasing (for example, ML inference can run better on GPUs). Because of this common industry demand, the overall technology for operating microservices is advancing rapidly. The ecosystem for deploying services (containers, serverless), managing them (Kubernetes, also known as k8s), and monitoring them (observability) has matured significantly over the past decade. In addition, best practices for using these efficiently in products have become better established (CI/CD, IaC, loose coupling).

- Data (data architecture): Users used to have a one-way relationship with services where they simply consumed what was offered, and that backbone is still essential. For example, Amazon displays products that sellers have already registered. However, the data that flows around the user journey has also become very important. For instance, if a user has purchased bananas and a diet book, recommending a blender might encourage further purchases. Products added to the shopping cart once could be a more effective target for discount advertisements. This kind of personalization has started to impact product quality significantly, and naturally, solutions that record, analyze, and utilize the diverse behaviors of users while protecting their privacy are actively developing (Spark, Kafka, Flink, Snowflake, data catalog). Consequently, data that was once static has become much more dynamic, and strategies for effectively utilizing this data in ML are becoming important (feature store, vector database, data mesh, RAG, MLflow).

- Org (platform engineering): While the two changes mentioned above are somewhat inevitable, without overall coordination, complexity can surge without yielding substantial benefits. Naturally, companies have introduced teams under various names to drive these changes (DevOps, Platform teams) [9]. These teams have the strategic task of riding the waves of change and continuously and efficiently introducing the capabilities the company needs (a separate ML Platform team is common, given the importance of ML as a topic in its own right). However, reality is often much messier than this tidy ideal. Because it is hard to hide inherent complexity behind simple abstractions, that complexity gradually leaks out. As a result, other teams may feel that systems have become unnecessarily complicated. Ultimately, introducing a single organizational unit cannot make a company efficient overnight (extending the concept of “no silver bullet” [8]); it requires a collaborative culture, leadership, and development capabilities working in harmony.
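The personalization example in the Data item above (bananas and a diet book leading to a blender) can be sketched as a simple co-purchase count. This is a toy illustration under stated assumptions, not how any real retailer's recommender works: the purchase history, the function names, and the scoring rule are all hypothetical.

```python
from collections import Counter
from itertools import permutations


def build_co_purchase(baskets: list[list[str]]) -> dict[str, Counter]:
    """Count how often each pair of products appears in the same basket."""
    co: dict[str, Counter] = {}
    for basket in baskets:
        for a, b in permutations(set(basket), 2):
            co.setdefault(a, Counter())[b] += 1
    return co


def recommend(co: dict[str, Counter], owned: set[str], k: int = 1) -> list[str]:
    """Suggest the k products most often bought alongside the owned ones."""
    scores: Counter = Counter()
    for item in owned:
        for other, n in co.get(item, {}).items():
            if other not in owned:   # do not recommend what the user has
                scores[other] += n
    return [item for item, _ in scores.most_common(k)]


# Hypothetical purchase history from other users.
history = [
    ["banana", "diet book", "blender"],
    ["banana", "blender"],
    ["diet book", "blender", "yoga mat"],
    ["banana", "milk"],
]

co = build_co_purchase(history)
print(recommend(co, {"banana", "diet book"}))  # ['blender']
```

Even this tiny version needs every basket recorded and kept current, which hints at why the recording, analysis, and serving layers listed above grow into a data architecture of their own.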

As the volatile stock market surrounding the above companies shows, no one can be sure what the landscape will look like in five years. This final wave is not merely ripples of the above two waves but a new wave in its own right because it has significantly accelerated the overall tempo of the software industry. Users’ expectations have risen, and the impact of software on business has grown; thus, how to manage this complexity more swiftly while delivering value is a core competency for both individuals and enterprises.

A high production rate solves many ills. – Elon Musk

4. Personal Examples

After over a decade in the software industry across Silicon Valley, Seattle, and South Korea, I have observed that catching the wave often influences career development more strongly than individual capability. Conversely, where the waves are weak, even exceptionally talented individuals seem to struggle to put their abilities to use. After all, even the most skilled surfer can only tread water without waves. Here are three small personal examples.

4.a. Choosing a Team

I was on Google’s team for Borg, the predecessor to Kubernetes, for over six years, from 2015 to 2022. Initially, I received a fair amount of negative advice: that I would only be doing maintenance rather than new work, on a legacy project that started in 2003. Indeed, the team consisted of only about ten people, and even the most recent projects were not very attractive. Perhaps that’s why it was easier to join the team without much relevant experience.

However, as Google needed to navigate the waves above, there was a lot of work to be done on Borg, and by the time I left, the team had grown to about 100 people. Naturally, there were ample opportunities for impact, and most folks who had been on the team before I joined could be promoted (if they wanted) to ranks at Google that are typically hard to reach. While each individual was talented and worked hard, such broad advancement was only possible with a strong wave.

4.b. Leading a Team

As mentioned in my previous writing about becoming an engineering manager, opportunity is the most critical condition for becoming a team leader. If a team of five remains a team of five under the same manager three years later, there may be opportunities to expand what you do, but there will inevitably be no chance to build a team. Of course, becoming a manager isn’t the only growth path, but that path is clearly blocked.

It is hard to imagine now, but just four years ago, Google Cloud offered almost only one type of VM, the N1. The E2 was added in March 2020, and the options are now much more diverse. As a result, Borg had to manage the cloud’s far more heterogeneous servers, and the argument for creating a proper team to handle all the looming projects was easy to accept. Because of Borg’s unique complexities, external hires were not easy to find, and naturally, I got the opportunity to build and lead a team.

4.c. Identifying a Project

The next company, Quora, was the first place where I didn’t start as a junior. Since the company was small with few seniors, I had to start by identifying good projects. Despite this uncertainty, as I wrote above, I expected various opportunities in the ML Platform area.

The waves I summarized above helped me immensely in navigating the path: without knowing the company’s internal state in detail, I could still deduce structural problems. For example, since the company started in 2009, I assumed that the way it operated ML wouldn’t effectively handle the heterogeneous supply while dealing with explosive and diverse demand. I then easily found examples where cost or developer productivity was an issue, matching my suspicions. I could instead have worked inductively from individual incidents, but organizations have inertia, and existing problems rarely scream to be discovered.

Of course, identifying problems is just the beginning: the effort is only meaningful if you can develop and implement solutions that fit the company’s reality and deliver results. However, you cannot create impact without good projects, so uncovering good opportunities is especially important for career development. While others might hand you good projects, the ability to develop meaningful projects on your own becomes more important the more senior your role. The good news is that a company is a component of a larger system and, like individuals, is influenced by macro trends, so significant shifts can present opportunities.

5. Closing

My previous writing showed the compounding structure of one’s career growth. This time, the focus is on the driving force behind that compounding. It’s not about struggling alone; rather, we can go much further when we utilize the great external forces. As long as humanity exists, various waves will come and go, and by focusing on your wave, you might also avoid the exhausting competition with others – we can neither ride every wave nor do we need to.

If snowballing was a metaphor that showed a long-term perspective five years ago, wave riding is a better analogy for a short-term perspective [10]. In the long run, a snowball grows by compounding, but in the short term you need different strategies and adjustments of force depending on external factors and your own situation. Not all growth is visible, so snowballing can seem overly idealized in the short term. For example, if I’m in a quiet sea, choosing a new position in a more active sea might be most important. Studying how others ride well, practicing on small waves, and developing my style are also meaningful growth. If you’re riding a fine wave, enjoy the present without distraction, but remember it’s important to move on to the next wave as the current one ends. ∎

- [1] YouTube: John Hennessy (Stanford University’s 10th president and a Turing Award recipient), “The Moore’s Law that we knew has ended.” The graph is from the Turing Award lecture published in CACM.

- [2] YouTube: Amin Vahdat (Google Infra VP), “How the next generation of computing will evolve.”

- [3] Visualization on Statista based on data from the ITU (a specialized agency of the UN).

- [4] The graph is from a report by EPOCH. They observed that until 2010, the computing demand for ML had progressed at a pace comparable to Moore’s Law. However, with the advent of the deep learning era, it started doubling every six months, and with the onset of the era of large models around 2015, this trend has continued.

- [5] The paper on Google’s TPUv4 states that the demand for deep learning-based recommendation models still accounts for 24% of the total TPUv4 usage, which has not changed significantly from 27% three years ago.

- [6] The source is Clouded Judgement, a SaaS-related newsletter operated by a VC named Jamin Ball. Beyond the list, there are also numerous private companies.

- [8] Fred Brooks, a Turing Award winner who profoundly influenced software development theory, categorized complexity into two types: essential and accidental. Essential complexities don’t have simple solutions, and as the industry has evolved, most problems have become essential. More details are in his famous essay “No Silver Bullet.”

- [9] Gartner is already addressing this area as one of the significant changes.

- [10] Since all entities are both particles and waves, both concepts are meaningful. :)